How to Configure Robots.txt in Magento 2

Vinh Jacker | 06-22-2016

As you know, configuring robots.txt is important for any website that is working on its SEO. In particular, when you configure a sitemap so that search engines can index your store, you also need to give web crawlers instructions in the robots.txt file so that they skip the pages you don’t want crawled. The robots.txt file, which resides in the root of your Magento installation, contains directives that search engines such as Google, Yahoo, and Bing recognize and follow. In this post, I will walk you through configuring the robots.txt file so that it works well with your site.

What is Robots.txt in Magento 2?

The robots.txt file tells web crawlers which parts of your website they may crawl and which parts they should skip. Defining these rules for crawlers helps you optimize your website’s ranking. For example, you may want to keep crawlers away from particular parts of your store, which you can do through configuration. You can either use the default settings or set custom instructions for each search engine.
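To see how a crawler applies these rules, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. It assumes a hypothetical store at `shop.example.com` whose robots.txt contains a single `Disallow: /checkout/` rule; the helper names `robots_url` and `is_allowed` are made up for illustration. Crawlers look for the file only at the root of the host, which is why `robots_url` replaces the whole path of the page URL.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser

# Hypothetical rules for a hypothetical store at shop.example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
"""


def robots_url(page_url: str) -> str:
    """Return the robots.txt location a crawler checks for this page (always the host root)."""
    return urljoin(page_url, "/robots.txt")


def is_allowed(robots_txt: str, user_agent: str, page_url: str) -> bool:
    """Parse robots.txt text and ask whether user_agent may crawl page_url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, page_url)


if __name__ == "__main__":
    page = "https://shop.example.com/checkout/cart/"
    print(robots_url(page))
    print(is_allowed(ROBOTS_TXT, "Googlebot", page))
    print(is_allowed(ROBOTS_TXT, "Googlebot", "https://shop.example.com/women/tops.html"))
```

A real crawler downloads the file from `robots_url(...)` before fetching pages; the sketch passes the text in directly so it runs without network access.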

Steps to Configure Magento 2 robots.txt file

-

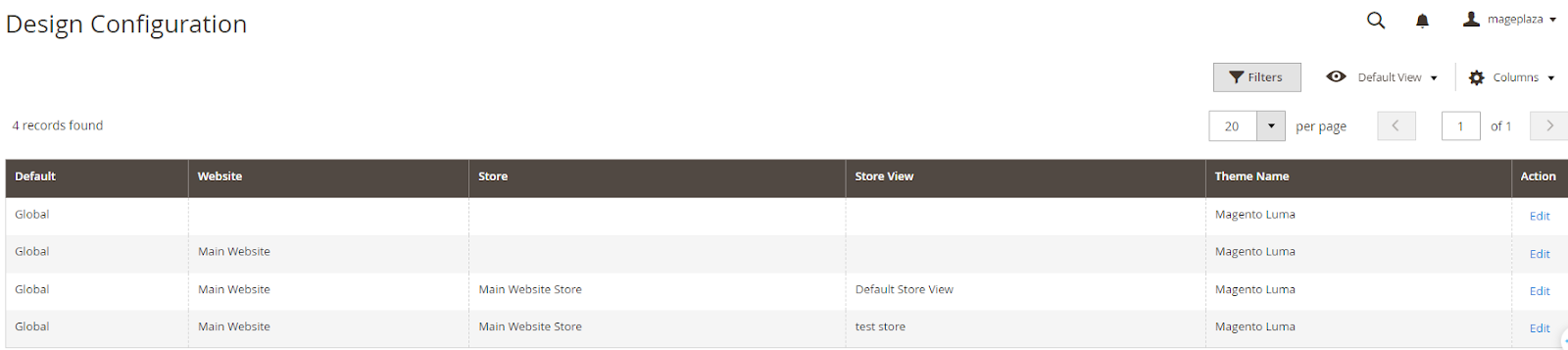

Log in to your

Magento 2 Admin Panel. -

Click

Content. In theDesignsection, selectConfiguration. -

Press edit to fix the

Global Design Configuration.

- Open the

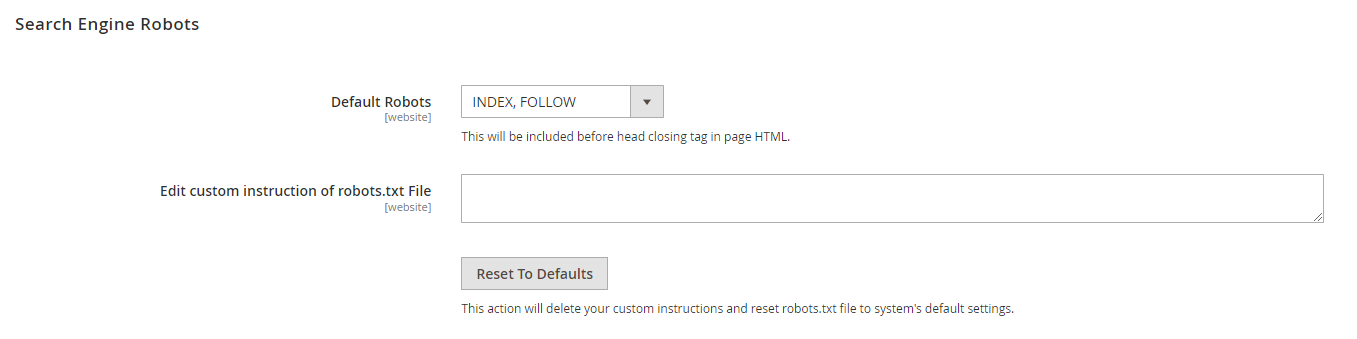

Search Engine Robotssection, and continue with the following:

-

In Default Robots, select one of the following:

-

INDEX, FOLLOW: Instructs search engine crawlers to index the store and recheck for changes.

-

NOINDEX, FOLLOW: Prevents indexing but allows to recheck for updates.

-

INDEX, NOFOLLOW: Indexes the store once without rechecking changes.

-

NOINDEX, NOFOLLOW: Blocks indexing and avoids further checks.

-

-

In the

Edit Custom instruction of robots.txt Filefield, enter custom instructions if needed. -

In the

Reset to Defaultsfield, click onReset to Defaultbutton if you need to restore the default instructions.

- When complete, click Save Config to apply your changes.
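The four Default Robots options combine two independent switches: whether a page may be indexed and whether its links may be followed. The sketch below decodes an option string into those two switches. `parse_default_robots` and `RobotsDirectives` are hypothetical names for illustration only; they are not part of Magento.

```python
from typing import NamedTuple


class RobotsDirectives(NamedTuple):
    index: bool   # may the page appear in search results?
    follow: bool  # may the crawler follow the links on the page?


def parse_default_robots(value: str) -> RobotsDirectives:
    """Decode a Default Robots value such as "NOINDEX, FOLLOW" into two flags."""
    tokens = {token.strip().upper() for token in value.split(",")}
    known = {"INDEX", "NOINDEX", "FOLLOW", "NOFOLLOW"}
    if not tokens <= known:
        raise ValueError(f"unexpected robots value: {value!r}")
    return RobotsDirectives(index="NOINDEX" not in tokens, follow="NOFOLLOW" not in tokens)


if __name__ == "__main__":
    for option in ("INDEX, FOLLOW", "NOINDEX, FOLLOW", "INDEX, NOFOLLOW", "NOINDEX, NOFOLLOW"):
        print(f"{option:18} -> {parse_default_robots(option)}")
```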

Magento 2 Robots.txt Examples

You can also keep website crawlers away from your pages by setting custom instructions, for example:

- Allows Full Access

User-agent: *
Disallow:

- Disallows Access to All Folders

User-agent: *
Disallow: /
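The two examples above differ by a single slash. This minimal sketch checks both with Python’s standard `urllib.robotparser`; the page path `/women/tops.html` and the `can_crawl` helper are placeholders for illustration.

```python
from urllib.robotparser import RobotFileParser

ALLOW_ALL = "User-agent: *\nDisallow:\n"    # empty Disallow value: nothing is blocked
BLOCK_ALL = "User-agent: *\nDisallow: /\n"  # "/" is a prefix of every path: everything is blocked


def can_crawl(robots_txt: str, path: str, user_agent: str = "Googlebot") -> bool:
    """Return True if user_agent may fetch path under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    for label, rules in (("full access", ALLOW_ALL), ("no access", BLOCK_ALL)):
        print(label, can_crawl(rules, "/women/tops.html"))
```

Python’s parser treats a blank line between `User-agent` and its rules as the end of the group, so keep each group’s lines together.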

Magento 2 Default Robots.txt

User-agent: *
Disallow: /lib/
Disallow: /*.php$
Disallow: /pkginfo/
Disallow: /report/
Disallow: /var/
Disallow: /catalog/
Disallow: /customer/
Disallow: /sendfriend/
Disallow: /review/
Disallow: /*SID=
Disallow: /*?
# Disable checkout & customer account
Disallow: /checkout/
Disallow: /onestepcheckout/
Disallow: /customer/
Disallow: /customer/account/
Disallow: /customer/account/login/
# Disable Search pages
Disallow: /catalogsearch/
Disallow: /catalog/product_compare/
Disallow: /catalog/category/view/
Disallow: /catalog/product/view/
# Disable common folders
Disallow: /app/
Disallow: /bin/
Disallow: /dev/
Disallow: /lib/
Disallow: /phpserver/
Disallow: /pub/
# Disable Tag & Review (Avoid duplicate content)
Disallow: /tag/
Disallow: /review/
# Common files
Disallow: /composer.json
Disallow: /composer.lock
Disallow: /CONTRIBUTING.md
Disallow: /CONTRIBUTOR_LICENSE_AGREEMENT.html
Disallow: /COPYING.txt
Disallow: /Gruntfile.js
Disallow: /LICENSE.txt
Disallow: /LICENSE_AFL.txt
Disallow: /nginx.conf.sample
Disallow: /package.json
Disallow: /php.ini.sample
Disallow: /RELEASE_NOTES.txt
# Disable sorting (Avoid duplicate content)
Disallow: /*?*product_list_mode=
Disallow: /*?*product_list_order=
Disallow: /*?*product_list_limit=
Disallow: /*?*product_list_dir=
# Disable version control folders and others
Disallow: /*.git
Disallow: /*.CVS
Disallow: /*.zip$
Disallow: /*.svn$
Disallow: /*.idea$
Disallow: /*.sql$
Disallow: /*.tgz$
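Several of these rules rely on the `*` wildcard and the `$` end-of-URL anchor, which Python’s standard `urllib.robotparser` does not implement. The sketch below is a hypothetical helper that follows the matching rules of RFC 9309 (the Robots Exclusion Protocol): `*` matches any sequence of characters, a trailing `$` anchors the end of the URL, the longest matching rule wins, and Allow wins a tie. It handles the rules of a single User-agent group only, and `MAGENTO_RULES` is just a subset of the list above.

```python
from __future__ import annotations

import re

# A few rules taken from the list above (one "User-agent: *" group).
MAGENTO_RULES = [
    ("Disallow", "/*.php$"),
    ("Disallow", "/*?"),
    ("Disallow", "/checkout/"),
    ("Disallow", "/catalogsearch/"),
    ("Disallow", "/*?*product_list_order="),
]


def pattern_to_regex(pattern: str) -> re.Pattern[str]:
    """Translate a robots.txt path pattern into a regex anchored at the start of the URL."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    # Escape everything literally, then let each '*' match any run of characters.
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_blocked(rules: list[tuple[str, str]], url_path: str) -> bool:
    """Return True if the longest matching rule for url_path is a Disallow."""
    best_length, allowed = -1, True  # no matching rule: the URL may be crawled
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty value ("Disallow:") matches nothing
        if pattern_to_regex(pattern).match(url_path):
            is_allow = directive.lower() == "allow"
            # The most specific (longest) pattern wins; Allow wins a tie.
            if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
                best_length, allowed = len(pattern), is_allow
    return not allowed


if __name__ == "__main__":
    for path in ("/women/tops.html", "/index.php",
                 "/women/tops.html?product_list_order=price", "/catalogsearch/result/"):
        print(f"{path:45} blocked={is_blocked(MAGENTO_RULES, path)}")
```

Paths are matched case-sensitively, which is why a rule like `/*.zip$` does not block a file ending in `.ZIP`.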

More Robots.txt examples

Block Googlebot from a folder

User-agent: Googlebot
Disallow: /subfolder/

Block Googlebot from a page

User-agent: Googlebot
Disallow: /subfolder/page-url.html
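The sketch below combines the two examples above into one hypothetical Googlebot group and checks it with Python’s standard `urllib.robotparser`. The path `/other-folder/page-url.html` is a placeholder so that the single-page rule is not already covered by the folder rule. Because the file has no `User-agent: *` group, crawlers other than Googlebot are unaffected.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file combining the two examples above; /other-folder/ is a placeholder path.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /subfolder/
Disallow: /other-folder/page-url.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Googlebot", "/subfolder/any-page.html"),         # whole folder blocked
    ("Googlebot", "/other-folder/page-url.html"),      # single page blocked
    ("Googlebot", "/other-folder/another-page.html"),  # neighboring page still allowed
    ("Bingbot", "/subfolder/any-page.html"),           # the group only targets Googlebot
]

if __name__ == "__main__":
    for agent, path in checks:
        print(f"{agent:10} {path:35} allowed={parser.can_fetch(agent, path)}")
```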

Common Web crawlers (Bots) {#common-web-crawlers-(bots)}

Here are the user-agent tokens of some common bots on the internet.

User-agent: Googlebot
User-agent: Googlebot-Image
User-agent: Googlebot-Video
User-agent: Bingbot
User-agent: Slurp # Yahoo
User-agent: DuckDuckBot
User-agent: Baiduspider
User-agent: YandexBot
User-agent: facebot # Facebook
User-agent: ia_archiver # Alexa

How to add a sitemap to the robots.txt file in Magento 2?

Much like the robots.txt file, the Magento sitemap plays a crucial role in optimizing your website for search engines. It helps search engines discover and analyze your website’s links more thoroughly. Because robots.txt tells crawlers what to crawl, it is also a good place to tell them where your sitemap is.

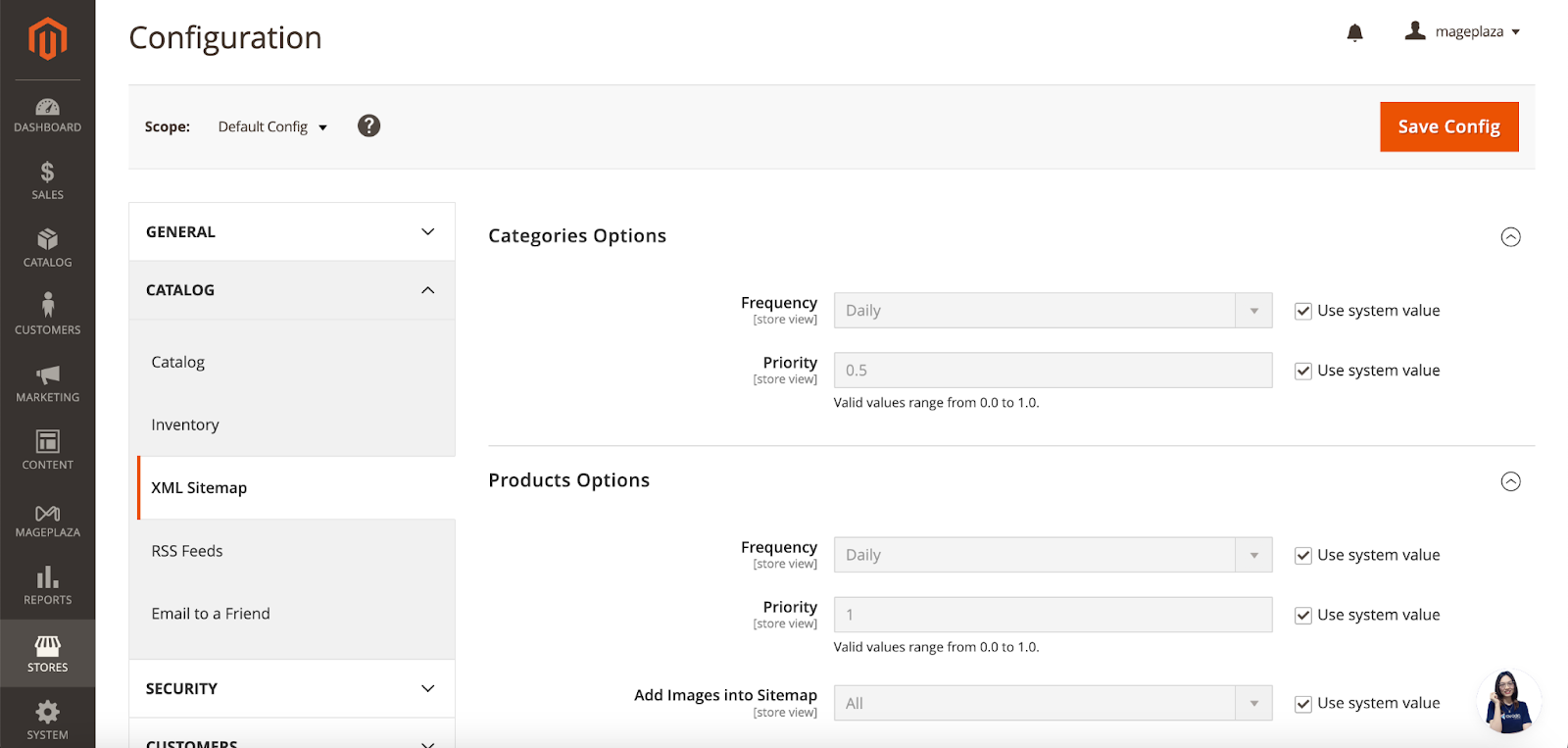

To integrate a sitemap into Magento’s robots.txt file, follow these steps:

Go to Stores > Configuration > Catalog > XML Sitemap and locate the Search Engine Submission Settings section.

Set Enable Submission to Robots.txt to Yes.

If you wish to add a custom XML sitemap to robots.txt, go to Content > Design > Configuration, select a website, and open Search Engine Robots. Then add a Sitemap: line with the full URL of your custom sitemap to the Edit custom instruction of robots.txt File field.
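As a minimal sketch of what the result looks like to a crawler, the example below parses a hypothetical robots.txt with two `Sitemap:` lines and reads them back with `RobotFileParser.site_maps()` (available since Python 3.8). The store domain and the `custom_sitemap.xml` path are placeholders. Sitemap lines take a full URL and do not belong to any User-agent group, so they can appear anywhere in the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; the domain and sitemap paths are placeholders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/

Sitemap: https://shop.example.com/sitemap.xml
Sitemap: https://shop.example.com/media/custom_sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

if __name__ == "__main__":
    # site_maps() returns the listed sitemap URLs, or None if there are none.
    for sitemap in parser.site_maps() or []:
        print(sitemap)
```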

Applying Best Practices for Robots.txt

For the best search engine performance, follow these best practices.

Disallow Irrelevant Pages

To enhance search engine optimization (SEO), specify which pages or directories search engines should not crawl. In your robots.txt file, use the “Disallow” directive to prevent crawlers from accessing irrelevant content. For instance, if you have pages with duplicate or thin content, disallow them to avoid diluting your site’s overall quality.

Include a Sitemap

By adding your XML sitemap URL to the robots.txt file, you help search engines find and index all relevant pages on your site. This lets search engines navigate your content efficiently, improving overall visibility.

Restrict Access to Sensitive Areas

Certain directories, such as admin panels or CMS directories, contain sensitive information. Use the “Disallow” directive in your robots.txt file to keep crawlers out of these areas, so that search engines do not inadvertently index them. Keep in mind that robots.txt is publicly readable and only advisory, so it does not replace proper access control for security and privacy.

Optimize Crawl Budget

Crawling resources are finite, and search engine bots allocate a crawl budget to each site. To make the most of this budget, specify which areas do not need to be crawled. For instance, review pages or product comparison pages may add little value in search results. Use the robots.txt file to limit crawling of non-essential content, so that important pages receive adequate attention.

Regularly Check for Errors

Even well-configured robots.txt files can run into issues. Regularly check your file for syntax errors or incorrect directives; tools like Google Search Console can help identify problems. By fixing errors promptly, you keep your robots.txt setup effective and support your site’s overall SEO performance.
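Between checks in Search Console, a small script can catch common mistakes before you paste instructions into Magento. The sketch below is a hypothetical helper, not an official validator: it only recognizes the five directives listed in `KNOWN_FIELDS` and flags rules that appear before any User-agent line, two directives squeezed onto one line, paths that do not start with `/`, and relative sitemap URLs.

```python
from __future__ import annotations

# Directives this sketch recognizes; search engines may support others.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots(text: str) -> list[str]:
    """Return human-readable warnings for common robots.txt mistakes."""
    problems: list[str] = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' in {raw!r}")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            problems.append(f"line {number}: unrecognized directive {field!r}")
        elif field == "user-agent":
            seen_user_agent = True
            if " " in value:
                problems.append(f"line {number}: user-agent {value!r} contains spaces (two directives on one line?)")
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append(f"line {number}: {field} rule appears before any User-agent line")
            if value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path {value!r} should start with '/'")
        elif field == "sitemap" and not value.startswith(("http://", "https://")):
            problems.append(f"line {number}: sitemap {value!r} is not an absolute URL")
    return problems


SAMPLE = """\
Disallow: /var/
User-agent: BadBot Disallow: /
User-agent: *
Dissallow: /checkout/
Disallow: checkout/
Sitemap: /sitemap.xml
"""

if __name__ == "__main__":
    for problem in lint_robots(SAMPLE):
        print(problem)
```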

FAQs for Robots.txt in Magento 2

1. What role does the Googlebot user agent play in configuring robots.txt within Magento 2?

The Googlebot user agent lets you write robots.txt rules that apply specifically to Google’s main crawler, so it shapes how your Magento website interacts with that bot.

By configuring your robots.txt file, you control which content the bot is allowed or disallowed from crawling on your site. However, it’s essential to recognize that there are two distinct user agents involved:

- Googlebot User Agent: Responsible for crawling and indexing your website.
- Page User Agent: Represents the user agent or web crawler responsible for accessing and rendering your web pages. When configuring your robots.txt file, consider both the Googlebot user agent and a page user agent to make sure that your website’s content is appropriately indexed and displayed.

2. What does “Access User Agent” mean in robots.txt?

An “Access User Agent” in robots.txt refers to a specific user agent or web crawler that you intentionally allow to access your website. Essentially, it’s a way to grant permission to certain bots while restricting others. By using directives, you can specify which user agents are permitted to access specific parts of your site. For example, if you want to allow Googlebot but disallow other crawlers, you can define rules accordingly.
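A minimal sketch of the “allow Googlebot, block everyone else” case, checked with Python’s standard `urllib.robotparser`. A crawler obeys the group that names it and falls back to the `User-agent: *` group only when no group matches, so Googlebot and other bots get different answers for the same URL. The page path and the `BadBot/2.0` user-agent string are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Googlebot gets its own group; every other crawler falls back to the "*" group.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

if __name__ == "__main__":
    for agent in ("Googlebot", "Bingbot", "BadBot/2.0"):
        print(agent, parser.can_fetch(agent, "/women/tops.html"))
```

This only works for well-behaved crawlers: robots.txt is a request, not an enforcement mechanism, so a bot that ignores it can still fetch your pages.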

3. Is it recommended to disallow checkout pages in the robots.txt file?

Yes, disallowing checkout pages in your robots.txt file is a recommended practice. Doing so keeps web crawlers out of the checkout process, whose pages are specific to each customer and have no place in search results. To achieve this, add the following line to your robots.txt file, inside the User-agent group it should apply to:

Disallow: /checkout/

4. How can I manage access for specific user agents in robots.txt?

To manage access for specific user agents in your robots.txt file, use the User-agent directive. For instance, if you want to disallow the user agent called BadBot from crawling your site, add the lines below:

User-agent: BadBot
Disallow: /

5. What’s the significance of allowing catalog search pages in robots.txt?

It is generally best to let search engines index catalog search pages. Fortunately, Magento’s default settings typically permit this, so you don’t need to add specific directives for catalog search pages to the robots.txt file. Just make sure that your robots.txt file contains no Disallow rules for catalog search URLs, such as /catalogsearch/.

6. What is the “folders user agent” in Magento 2 robots.txt?

In Magento 2, the “folders user agent” refers to combining a User-agent line with Disallow (or Allow) rules for specific folders in the robots.txt file. Together, these lines specify which user agents (such as search engine bots) are allowed or disallowed from accessing specific folders or directories on your Magento website.

It’s a helpful way to control bot access and keep certain sensitive or internal areas off-limits to crawlers. By customizing these rules, you can fine-tune how web robots treat different parts of your site.

7. What are the default directives in Magento’s robots.txt file?

By default, Magento’s robots.txt file allows most search engine robots to access the whole website. However, these default instructions are only a starting point. As a website owner, you should review and customize these settings depending on your specific needs.

Customization lets you optimize visibility for search engines while keeping sensitive or irrelevant content from being crawled. Remember that the robots.txt file is publicly accessible, so it’s not suitable for hiding confidential information. Review the file regularly so that its instructions keep fitting your site’s requirements.

8. Does Robots.txt affect website performance in Magento 2?

While Robots.txt itself doesn’t directly affect website performance, an improper configuration, whether it blocks too much or leaves low-value URLs open to heavy crawling, can indirectly affect your crawl budget and server load.

9. What happens if I encounter errors in my Robots.txt file in Magento 2?

If your Robots.txt file in Magento 2 contains errors, such as syntax errors or incorrect directives, it may adversely affect how search engines crawl and index your website. It’s important to monitor and validate your Robots.txt file regularly, for example with the robots.txt report in Google Search Console, so that you can identify and fix errors promptly.

10. Is it possible to set crawl delays for search engine bots using Robots.txt in Magento 2?

Yes, you can set crawl delays for search engine bots in the Robots.txt file in Magento 2 by using the Crawl-delay directive followed by a number of seconds. This lets you control the rate at which supporting crawlers access your website, helping to manage server load and bandwidth usage. Note that support varies by search engine: Bingbot honors Crawl-delay, while Googlebot ignores it.
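A minimal sketch, assuming you only want to slow down Bingbot, checked with Python’s standard `urllib.robotparser` (its `crawl_delay()` method returns the delay for a user agent, or `None` if none is set; this parser only reads whole-number values). The rules are illustrative, not a recommended setting.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 10

User-agent: *
Disallow: /checkout/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

if __name__ == "__main__":
    print(parser.crawl_delay("Bingbot"))    # 10
    print(parser.crawl_delay("Googlebot"))  # None: the "*" group sets no delay
```

Because Bingbot now has its own group, it no longer follows the `*` rules, so in this file it may crawl `/checkout/`. Repeat any Disallow lines you want Bingbot to respect inside its group.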

The bottom line

Configuring Robots.txt is one of the first steps toward better search engine rankings, as it tells search engines which pages to crawl and which to skip. After that, take a look at this guide on how to configure the Magento 2 sitemap. If you want a hassle-free solution that works right out of the box with easy installation, check out our SEO extension. If you need more help, contact us and we will handle the rest.