The Complete Guide to UTM Parameters & Google Analytics for Magento website

Master UTM parameters and Google Analytics for Magento. Track traffic sources, measure campaign performance, and get practical tips on using the data for better business decisions.

Duplicate content is a constant source of anxiety for many site owners.

Read almost anything about it, and you might come to believe that your website is riddled with duplicate content problems and that a Google penalty could land any day.

Today’s post will give you a thorough understanding of duplicate content: what it is, why it happens, how it affects your Magento 2 website, and how to fix it effectively.

Are you ready to begin? Let’s dive right in.

Duplicate content is the same content appearing in more than one place on the Internet. When several versions are identical, search engines struggle to decide which one best matches the user’s search query. As a result, they rarely display all of the duplicate pages and instead pick the version that is most likely the original or the most relevant.

Duplicate content can arise from various sources, and each cause requires its own solution.

Generally, Google avoids ranking pages that contain identical content. Google explicitly emphasizes its effort to prioritize pages with unique information, and having pages on a website lacking distinctive content can negatively impact search engine rankings. There are three primary issues associated with websites that feature substantial duplicate content.

Reduced Organic Traffic: Google is disinclined to rank pages that incorporate copied content from other pages in its index, even if the duplication occurs within the same website. If multiple pages on a site share similar content, determining the original page becomes challenging for Google, leading to difficulties in ranking all such pages.

Rare Occurrence of Penalty: Google acknowledges that duplicate content might result in a penalty or the complete removal of a website from its index. However, such penalties are extremely uncommon and are typically imposed only when a website intentionally scrapes or duplicates content from other sources. For most cases of internal duplicate content, concerns about receiving a “duplicate content penalty” are generally unwarranted.

Limited Indexed Pages: This issue is particularly crucial for websites with a large number of pages, such as e-commerce sites. Google may not only lower the ranking of duplicate content but also refuse to index it altogether. Consequently, if certain pages on a website are not being indexed, the cause may be that the crawl budget is being consumed by duplicate content.

Although duplicate content is sometimes visible to the naked eye, it is often hidden in the code of a site. That’s why you should rely on software to check for duplicate content.

Many SEO audit tools can identify different URLs with identical content and suggest how to fix them. They can also offer general tips on handling duplicate content.

Typically, site audit tools detect duplicate content by comparing meta descriptions and titles, then produce an exportable list of URLs that makes the issue easier to identify and fix. Fixing these technical problems helps improve meta-tag SEO, which can bring higher click-through rates from search engine results pages.
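To make the idea concrete, here is a minimal Python sketch of how a check based on titles and meta descriptions could work. It is not how any particular audit tool is implemented; the names `HeadMetaParser`, `find_duplicate_meta`, and the sample URLs are hypothetical, and the sketch assumes you already have the HTML of each page in hand.

```python
from collections import defaultdict
from html.parser import HTMLParser


class HeadMetaParser(HTMLParser):
    """Collects the <title> text and the meta description of one HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def find_duplicate_meta(pages):
    """Group URLs that share the same title and meta description.

    `pages` maps URL -> HTML source (hypothetical input format).
    Returns a list of URL groups, each containing two or more URLs.
    """
    groups = defaultdict(list)
    for url, html in pages.items():
        parser = HeadMetaParser()
        parser.feed(html)
        key = (parser.title.strip().lower(), parser.description.lower())
        if not any(key):
            continue  # skip pages with neither a title nor a description
        groups[key].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical sample data: two URLs serve the same product page.
PRODUCT_HTML = (
    "<html><head><title>Running Shoes</title>"
    '<meta name="description" content="Buy running shoes."></head></html>'
)
SAMPLE_PAGES = {
    "https://example.com/shoes": PRODUCT_HTML,
    "https://example.com/shoes?color=red": PRODUCT_HTML,
    "https://example.com/hats": "<html><head><title>Hats</title></head></html>",
}

if __name__ == "__main__":
    for group in find_duplicate_meta(SAMPLE_PAGES):
        print("Possible duplicates:", ", ".join(group))
```

Matching on titles and descriptions only flags candidates. Pages can share both and still have different body content, so each group deserves a manual look.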

Off-site duplicate content is spread across different websites, so it is more difficult to find.

To make sure you’re not publishing content that already exists on another website, consider running it through a plagiarism tool before release. This is especially crucial if you’re working with outsourced writers or new team members who may not realize the significance of unique content.

Moreover, you can use the same kind of tool to check whether other websites are copying your content. Some tools scan the web to identify instances of content copied from your website.

How can the problem be resolved? To prevent duplicate content issues from impacting your search rankings, follow these steps:

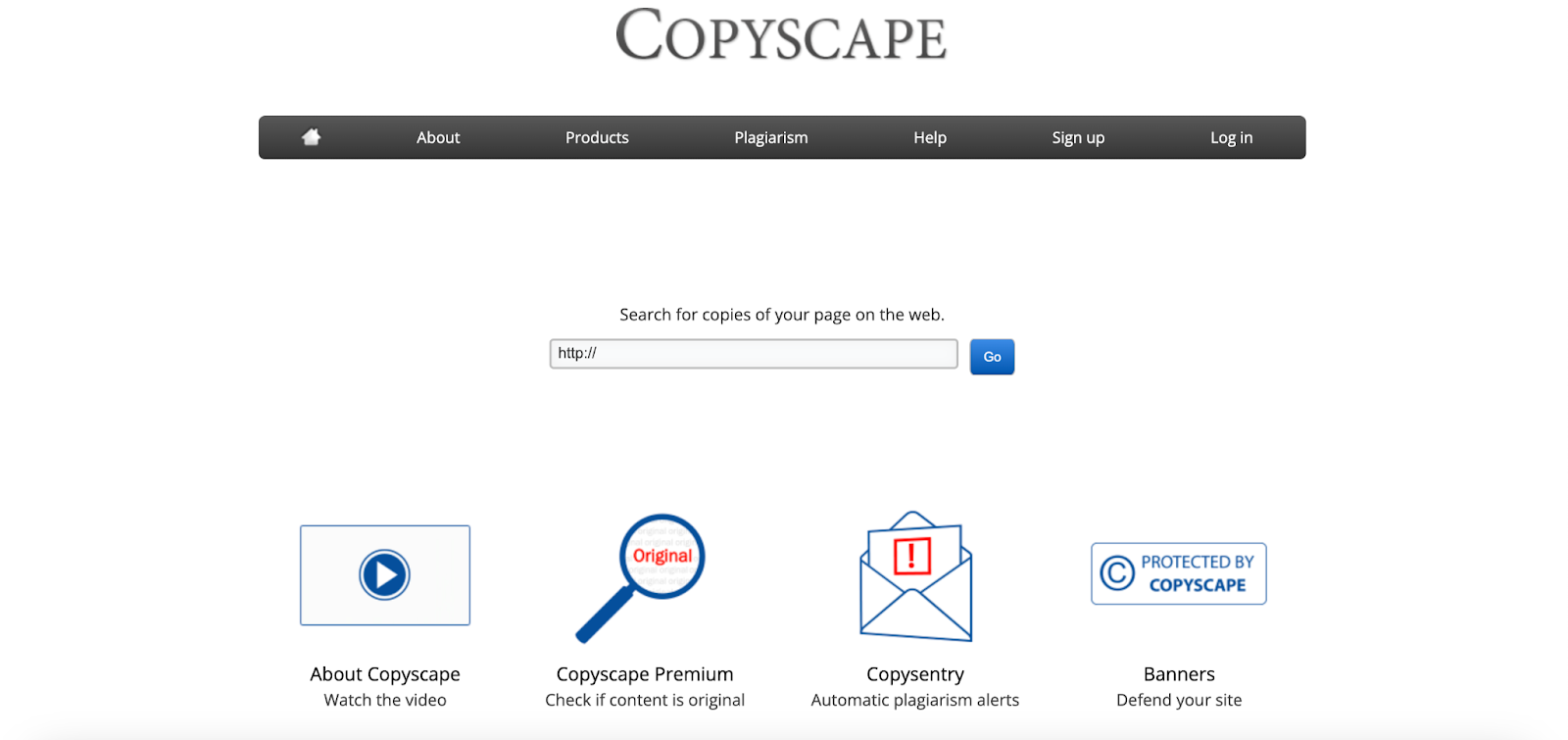

Employ tools like Copyscape to scrutinize your website content for potential copies that may be unknown to you.

Copyscape, a reliable platform, provides an on-demand plagiarism-checking service. A free option is also available, checking the web for duplicates of already-published content.

For content acquired from agencies or freelance writers, use Copyscape Premium to authenticate the originality before publishing. Additionally, consider Copysentry for automatic alerts when copies of your content appear online.

Safeguard your web content from plagiarism by deactivating the copy command and right-click menu.

WordPress users can use a plugin like WP Content Copy Protection & No Right Click for this purpose. Alternatively, website owners may manually disable these features using CSS and JavaScript.

Distinguish the original content from duplicates by incorporating the canonical tag.

Add the “rel=canonical” tag to all intentionally created duplicate pages, pointing it at the URL of the original content.

Canonical tags guide search engines to the original source of duplicate content. For those using content syndication services, ensure the publishing site also employs canonical tags.
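As an illustration, the following Python sketch builds a canonical `<link>` element for a page by stripping query parameters that change the URL but not the content. The parameter list in `IGNORED_PARAMS` and the function names are assumptions made for this example; which parameters are safe to drop depends on your own site.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these tracking parameters never change what the page shows.
IGNORED_PARAMS = {
    "utm_source",
    "utm_medium",
    "utm_campaign",
    "utm_term",
    "utm_content",
}


def canonical_url(url):
    """Return the URL without ignored parameters and without a fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


def canonical_link_tag(url):
    """Build the <link rel="canonical"> element for the page's <head>."""
    href = escape(canonical_url(url), quote=True)
    return f'<link rel="canonical" href="{href}">'


if __name__ == "__main__":
    print(canonical_link_tag("https://example.com/shoes?utm_medium=email"))
```

Every variant of the page would then carry the same tag in its `<head>`, so search engines see a single preferred URL.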

Conduct an internal check for existing duplicates on your website using SEO audit tools. Ahrefs offers a Site Audit tool that identifies both “near duplicates” and “exact duplicates.”

For exact duplicates, decide whether to use link canonicalization or delete the copies. For near duplicates, particularly those with similar headers, title tags, and meta descriptions, consider paraphrasing or rewriting the problematic sections.
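The difference between the two categories can be sketched in a few lines of Python. This is not how Ahrefs or any other tool classifies pages; it is one common approach, assuming that "exact" means identical text after normalization and "near" means a high overlap of three-word shingles. The 0.5 threshold and all function names are hypothetical choices for this example.

```python
import hashlib
import re


def normalize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def fingerprint(text):
    """Hash of the normalized text; equal hashes mean exact duplicates."""
    joined = " ".join(normalize(text))
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def shingles(text, size=3):
    """Set of overlapping word sequences of length `size`."""
    words = normalize(text)
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a, b, size=3):
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    set_a, set_b = shingles(a, size), shingles(b, size)
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


def classify(a, b, threshold=0.5):
    """Label a pair of texts as 'exact', 'near', or 'distinct'.

    The threshold is an assumed value; tune it against your own pages.
    """
    if fingerprint(a) == fingerprint(b):
        return "exact"
    if jaccard(a, b) >= threshold:
        return "near"
    return "distinct"


if __name__ == "__main__":
    red = "Lightweight red running shoes for men with breathable mesh upper"
    blue = "Lightweight blue running shoes for men with breathable mesh upper"
    print(classify(red, blue))
```

Pairs labeled "exact" are candidates for a canonical tag or deletion, while "near" pairs are the ones worth rewriting.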

Direct users from an old URL to a new, updated URL using a 301 redirect. This is beneficial during domain migrations or when modifying existing post permalinks.

On Apache-based hosting, you can set up 301 redirects in your website’s .htaccess file, which is accessible from your hosting platform. For specific instructions, contact your web hosting service provider.
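If you have many URLs to move, writing the rules by hand gets tedious. The Python sketch below generates `Redirect 301` lines (a directive from Apache's mod_alias module) from a mapping of old paths to new URLs. It assumes an Apache server with mod_alias enabled; the function name and the example paths are hypothetical, and you should review the output before pasting it into a live .htaccess file.

```python
from urllib.parse import urlsplit


def htaccess_redirects(mapping):
    """Build `Redirect 301` lines from {old_path: new_absolute_url}.

    Raises ValueError for entries that would produce a malformed rule.
    """
    lines = []
    for old_path, new_url in mapping.items():
        if not old_path.startswith("/"):
            raise ValueError(f"old path must start with '/': {old_path!r}")
        if urlsplit(new_url).scheme not in ("http", "https"):
            raise ValueError(f"target must be an absolute URL: {new_url!r}")
        if any(ch.isspace() for ch in old_path + new_url):
            raise ValueError("paths and URLs must not contain whitespace")
        lines.append(f"Redirect 301 {old_path} {new_url}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical example: one renamed post and one renamed category.
    print(
        htaccess_redirects(
            {
                "/old-post": "https://example.com/new-post",
                "/shoes-sale": "https://example.com/sale/shoes",
            }
        )
    )
```

Keep in mind that `Redirect` matches by path prefix, so a rule for `/old-post` also applies to URLs that begin with that path.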

Prevent duplicate content by hiring established content writers known for consistently delivering 100% original content.

Experienced content experts avoid shortcuts like paraphrasing or article spinning. While they may draw inspiration, they always create drafts using their own words from start to finish.

Duplicate content is a persistent problem for SEO, so it is worth minimizing the risk. Invest in content with useful information, differentiate yourself from other sites, and raise the question of copyright when another website wants to reuse your content. Hopefully, this article has shown you what duplicate content is and why it deserves your attention.

With the Mageplaza SEO extension, adding the Canonical URL Meta automatically prevents this kind of duplicate content and helps boost your SEO performance.